Low-Code and the Democratization of Programming

Rethinking Where Programming Is Headed

In the past decade, low-code and no-code solutions, which promise that anyone can create simple computer programs using templates, have grown into a multi-billion dollar industry that touches everything from data and business analytics to application building and automation. As more companies look to integrate low-code and no-code solutions into their digital transformation plans, the question emerges again and again: what will happen to programming?

Programmers know their jobs won’t disappear with a broadscale low-code takeover (even low-code is built on code), but undeniably their roles as programmers will shift as more companies adopt low-code solutions. This report is for programmers and software development teams looking to navigate that shift and understand how low-code and no-code solutions will shape their approach to code and coding. It will be fundamental for anyone working in software development—and, indeed, anyone working in any business that is poised to become a digital business—to understand what low-code means, how it will transform their roles, what kinds of issues it creates, why it won’t work for everything, and what new kinds of programmers and programming will emerge as a result.

Everything Is Low-Code

Low-code: what does it even mean? “Low-code” sounds simple: less is more, right? But we’re not talking about modern architecture; we’re talking about telling a computer how to achieve some result. In that context, low-code quickly becomes a complex topic.

One way of looking at low-code starts with the spreadsheet, which has a pre-history that goes back to the 1960s—and, if we consider paper, even earlier. It’s a different, non-procedural, non-algorithmic approach to doing computation that has been wildly successful: is there anyone in finance who can’t use Excel? Excel has become table stakes. And spreadsheets have enabled a whole generation of businesspeople to use computers effectively—most of whom have never used any other programming language, and wouldn’t have wanted to learn a more “formal” programming language. So we could think about low-code as tools similar to Excel, tools that enable people to use computers effectively without learning a formal programming language.

Another way of looking at low-code is to take an even bigger step back, and look at the history of programming from the start. Python is low-code relative to C++; C and FORTRAN are low-code relative to assembler; assembler is low-code relative to machine language and toggling switches to insert binary instructions directly into the computer’s memory. In this sense, the history of programming is the history of low-code. It’s a history of democratization and reducing barriers to entry. (Although, in an ironic and unfortunate twist, many of the people who spent their careers plugging in patch cords, toggling in binary, and doing math on mechanical calculators were women, who were later forced out of the industry as those jobs became “professional.” Democratization is relative.) It may be surprising to say that Python is a low-code language, but it takes less work to accomplish something in Python than in C; rather than building everything from scratch, you’re relying on millions of lines of code in the Python runtime environment and its libraries.

In taking this bigger-picture, language-based approach to understanding low-code, we also have to take into account what the low-code language is being used for. Languages like Java and C++ are intended for large projects involving collaboration between teams of programmers. These are projects that can take years to develop, and run to millions of lines of code. A language like bash or Perl is designed for short programs that connect other utilities; bash and Perl scripts typically have a single author, and are frequently only a few lines long. (Perl is legendary for inscrutable one-liners.) Python is in the middle. It’s not great for large programs (though it has certainly been used for them); its sweet spot is programs that are a few hundred lines long. That position between big code and minimal code probably has a lot to do with its success. A successor to Python might require less code (and be a “lower code” language, if that’s meaningful); it would almost certainly have to do something better. For example, R (a domain-specific language for stats) may be a better language for doing heavy-duty statistics, and we’ve been told many times that it’s easier to learn if you think like a statistician. But that’s where the trade-off becomes apparent. Although R has a web framework that allows you to build data-driven dashboards, you wouldn’t use R to build an e-commerce site or an automated customer service agent; those are tasks for which Python is well suited.

Is it completely out of bounds to say that Python is a low-code language? Perhaps; but it certainly requires much less coding than the languages of the 1960s and ’70s. Like Excel, though not as successfully, Python has made it possible for people to work with computers who would never have learned C or C++. (The same claim could probably be made for BASIC, and certainly for Visual Basic.)

But this makes it possible for us to talk about an even more outlandish meaning of low-code. Configuration files for large computational systems, such as Kubernetes, can be extremely complex. But configuring a tool is almost always simpler than writing the tool yourself. Kelsey Hightower said that Kubernetes is the “sum of all the bash scripts and best practices that most system administrators would cobble together over time”; it’s just that many years of experience have taught us the limitations of endless scripting. Replacing a huge and tangled web of scripts with a few configuration files certainly sounds like low-code. (You could object that Kubernetes’ configuration language isn’t Turing complete, so it’s not a programming language. Be that way.) It enables operations staff who couldn’t write Kubernetes from scratch, regardless of the language, to create configurations that manage very complicated distributed systems in production. What’s the ratio—a few hundred lines of Kubernetes configuration, compared to a million lines of Go, the language Kubernetes was written in? Is that low-code? Configuration languages are rarely simple, but they’re always simpler than writing the program you’re configuring.

As examples go, Kubernetes isn’t all that unusual. It’s an example of a “domain-specific language” (DSL) constructed to solve a specific kind of problem. DSLs enable someone to get a task done without having to describe the whole process from scratch, in immense detail. If you look around, there’s no shortage of domain-specific languages. Ruby on Rails was originally described as a DSL. COBOL was a DSL before anyone really knew what a DSL was. And so are many mainstays of Unix history: awk, sed, and even the Unix shell (which is much simpler than using old IBM JCLs to run a program). They all make certain programming tasks simpler by relying on a lot of code that’s hidden in libraries, runtime environments, and even other programming languages. And they all sacrifice generality for ease of use in solving a specific kind of problem.

So, now that we’ve broadened the meaning of low-code to include just about everything, do we give up? For the purposes of this report, we’re probably best off looking at the narrowest and most likely implementation of low-code technology and limiting ourselves to the first, Excel-like meaning of “low-code”—but remembering that the history of programming is the history of enabling people to do more with less, enabling people to work with computers without requiring as much formal education, adding layer upon layer of abstraction so that humans don’t need to understand the 0s and the 1s. So Python is low-code. Kubernetes is low-code. And their successors will inevitably be even lower-code; a lower-code version of Kubernetes might well be built on top of the Kubernetes API. Mirantis has taken a step in that direction by building an Integrated Development Environment (IDE) for Kubernetes. Can we imagine a spreadsheet-like (or even graphical) interface to Kubernetes configuration? We certainly can, and we’re fine with putting Python to the side. We’re also fine with putting Kubernetes aside, as long as we remember that DSLs are an important part of the low-code picture: in Paul Ford’s words, tools to help users do whatever “makes the computer go.”

Excel (And Why It Works)

Excel deservedly comes up in any discussion of low-code programming. So it’s worth looking at what it does (and let’s willfully ignore Excel’s immediate ancestors, VisiCalc and Lotus). Why has Excel succeeded?

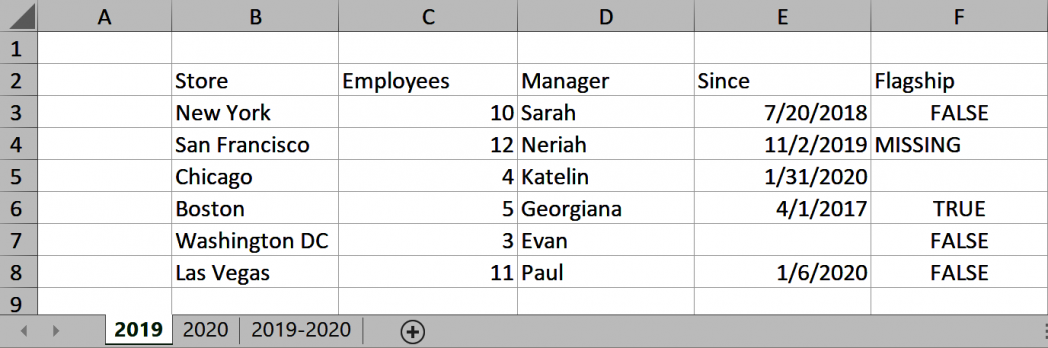

One important difference between spreadsheets and traditional programming languages is so obvious that it’s easily overlooked. Spreadsheets are “written” on a two-dimensional grid (Figure 1). Every other programming language in common use is a list of statements: a list of instructions that are executed more or less sequentially.

What’s a 2D grid useful for? Formatting, for one thing. It’s great for making tables. Many Excel files do that—and no more. There are no formulas, no equations, just text (including numbers) arranged into a grid and aligned properly. By itself, that is tremendously enabling.

Add the simplest of equations, and built-in understanding of numeric datatypes (including the all-important financial datatypes), and you have a powerful tool for building very simple applications: for example, a spreadsheet that sums a bunch of items and computes sales tax to do simple invoices. A spreadsheet that computes loan payments. A spreadsheet that estimates the profit or loss (P&L) on a project.

All of these could be written in Python, and we could argue that most of them could be written in Python with less code. However, in the real world, that’s not how they’re written. Formatting is a huge value, in and of itself. (Have you ever tried to make output columns line up in a “real” programming language? In most programming languages, numbers and text are formatted using an arcane and non-intuitive syntax. It’s not pretty.) The ability to think without loops and with a minimal amount of programming logic (Excel has a primitive IF statement) is important. Being able to structure the problem in two or three dimensions (you get a third dimension if you use multiple sheets) is useful, but most often, all you need to do is SUM a column.
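To make the comparison concrete, here is a minimal sketch of the simple invoice described above, written in Python. The line items, the tax rate, and the function names are all hypothetical, chosen only for illustration. The arithmetic takes a few lines; most of the remaining effort goes into the width and alignment codes needed to make the columns line up, which is exactly the work a spreadsheet grid does for free.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical line items (name, quantity, unit price) and tax rate,
# chosen only for illustration.
ITEMS = [
    ("Widget", 3, Decimal("4.50")),
    ("Gadget", 1, Decimal("19.99")),
    ("Shipping", 1, Decimal("7.25")),
]
TAX_RATE = Decimal("0.0625")


def invoice_totals(items, tax_rate):
    """Return (subtotal, tax, total), with the tax rounded to cents."""
    cent = Decimal("0.01")
    subtotal = sum((qty * price for _, qty, price in items), Decimal("0"))
    tax = (subtotal * tax_rate).quantize(cent, rounding=ROUND_HALF_UP)
    return subtotal, tax, subtotal + tax


def format_invoice(items, tax_rate):
    """Build the invoice as a list of aligned text lines."""
    # The width and alignment codes below are the "arcane" part:
    # "<12" left-aligns in 12 columns, ">10.2f" right-aligns with 2 decimals.
    lines = [f"{'Item':<12}{'Qty':>5}{'Price':>10}{'Amount':>10}"]
    for name, qty, price in items:
        lines.append(f"{name:<12}{qty:>5}{price:>10.2f}{qty * price:>10.2f}")
    subtotal, tax, total = invoice_totals(items, tax_rate)
    for label, amount in (("Subtotal", subtotal), ("Tax", tax), ("Total", total)):
        lines.append(f"{label:<27}{amount:>10.2f}")
    return lines


if __name__ == "__main__":
    print("\n".join(format_invoice(ITEMS, TAX_RATE)))
```

In a spreadsheet, the same invoice is a handful of cells, one multiplication per row, and a SUM at the bottom, with no format strings at all.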

If you do need a complete programming language, there’s always been Visual Basic—not part of Excel strictly speaking, but that distinction really isn’t meaningful. With the recent addition of LAMBDA functions, Excel is now a complete programming language in its own right. And Microsoft recently released Power Fx as an Excel-based low-code programming language; essentially, it’s Excel equations with something that looks like a web application replacing the 2D spreadsheet.

Making Excel a 2D language accomplished two things: it gave users the ability to format simple tables, which they really cared about; and it enabled them to think in columns and rows. That’s not sophisticated, but it’s very, very useful. Excel gave a new group of people the ability to use computers effectively. It’s been too long since we’ve used the phrase “become creative,” but that’s exactly what Excel did: it helped more people to become creative. It created a new generation of “citizen programmers” who never saw themselves as programmers—just more effective users.

That’s what we should expect of a low-code language. It isn’t about the amount of code. It’s about extending the ability to create to more people by changing paradigms (1D to 2D), eliminating hard parts (like formatting), and limiting what can be done to what most users need to do. This is democratizing.

UML

UML (Unified Modeling Language) was a visual language for describing the design of object oriented systems. UML was often misused by programmers who thought that UML diagrams somehow validated a design, but it gave us something that we didn’t have, and arguably needed: a common language for scribbling software architectures on blackboards and whiteboards. The architects who design buildings have a very detailed visual language for blueprints: one kind of line means a concrete wall, another wood, another wallboard, and so on. Programmers wanted to design software with a visual vocabulary that was equally rich.

It’s not surprising that vendors built products to compile UML diagrams into scaffolds of code in various programming languages. Some went further to add an “action language” that turned UML into a complete programming language in its own right. As a visual language, UML required different kinds of tools: diagram editors, rather than text editors like Emacs or vi (or Visual Studio). In modern software development processes, you’d also need the ability to check the UML diagrams themselves (not the generated code) into some kind of source management system; i.e., the important artifact is the diagram, not something generated from the diagram. But UML proved to be too complex and heavyweight. It tried to be everything to everybody: both a standard notation for high-level design and a visual tool for building software. It’s still used, though it has fallen out of favor.

Did UML give anyone a new way of thinking about programming? We’re not convinced that it did, since programmers were already good at making diagrams on whiteboards. UML was of, by, and for engineers, from the start. It didn’t have any role in democratization. It reflected a desire to standardize notations for high-level design, rather than rethink it. Excel and other spreadsheets enabled more people to be creative with computers; UML didn’t.

LabVIEW

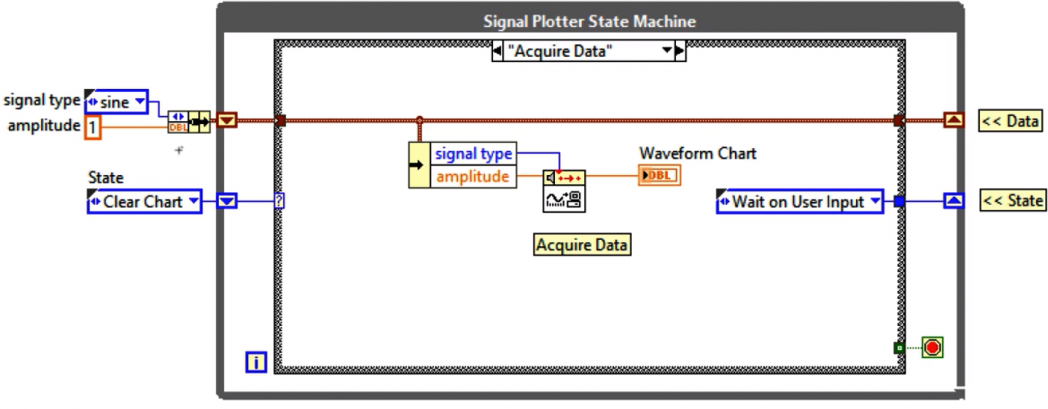

LabVIEW is a commercial system that’s widely used in industry—primarily in research & development—for data collection and automation. The high-school FIRST Robotics program depends heavily on it. The visual language that LabVIEW is built on is called G, and doesn’t have a textual representation. The dominant metaphor for G is a control panel or dashboard (or possibly an entire laboratory). Inputs are called “controls”; outputs are called “indicators.” Functions are “virtual instruments,” and are connected to each other by “wires.” G is a dataflow language, which means that functions run as soon as all their inputs are available; it is inherently parallel.
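G itself has no textual form, so the dataflow idea can only be approximated in a text language. The following is a minimal sketch in Python, not a description of how LabVIEW is implemented: `run_dataflow`, the node names, and the sensor arithmetic are all hypothetical. Each node fires once every wire it reads has a value, regardless of the order in which the nodes are listed.

```python
def run_dataflow(nodes, inputs):
    """Run each node as soon as all of its inputs have values.

    nodes maps an output wire name to (function, list of input wire names).
    inputs maps "control" wire names to their initial values.
    Returns (values on every wire, order in which the nodes fired).
    """
    values = dict(inputs)
    pending = dict(nodes)
    order = []
    while pending:
        ready = [name for name, (_, needs) in pending.items()
                 if all(wire in values for wire in needs)]
        if not ready:
            raise ValueError(f"nodes can never fire: {sorted(pending)}")
        # Everything in `ready` is independent and could run in parallel;
        # this sketch fires the nodes one after another for simplicity.
        for name in ready:
            func, needs = pending.pop(name)
            values[name] = func(*(values[wire] for wire in needs))
            order.append(name)
    return values, order


# Hypothetical "virtual instruments": scale a sensor reading, then check it.
# "alarm" is listed first but cannot fire until "celsius" has a value.
NODES = {
    "alarm": (lambda celsius, limit: celsius > limit, ["celsius", "limit"]),
    "celsius": (lambda volts: volts * 100.0, ["volts"]),
}

values, order = run_dataflow(NODES, {"volts": 0.5, "limit": 40.0})
print(order, values["alarm"])
```

Nothing in the program states a sequence of steps; the firing order falls out of which wires are connected to which nodes, and that is the shift in thinking a dataflow language asks for.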

It’s easy to see how a non-programmer could create software with LabVIEW doing nothing more than connecting together virtual instruments, all of which come from a library. In that sense, it’s democratizing: it lets non-programmers create software visually, thinking only about where the data comes from and where it needs to go. And it lets hardware developers build abstraction layers on top of FPGAs and other low-level hardware that would otherwise have to be programmed in languages like Verilog or VHDL. At the same time, it is easy to underestimate the technical sophistication required to get a complex system working with LabVIEW. It is visual, but it isn’t necessarily simple. Just as in Fortran or Python, it’s possible to build complex libraries of functions (“virtual instruments”) to encapsulate standard tasks. And the fact that LabVIEW is visual doesn’t eliminate the need to understand, in depth, the task you’re trying to automate, and the hardware on which you’re automating it.

As a purely visual language, LabVIEW doesn’t play well with modern tools for source control, automated testing, and deployment. Still, it’s an important (and commercially successful) step away from the traditional programming paradigm. You won’t see lines of code anywhere, just wiring diagrams (Figure 2). Like Excel, LabVIEW provides a different way of thinking about programming. It’s still code, but it’s a different kind of code, code that looks more like circuit diagrams than punch cards.

Copilot

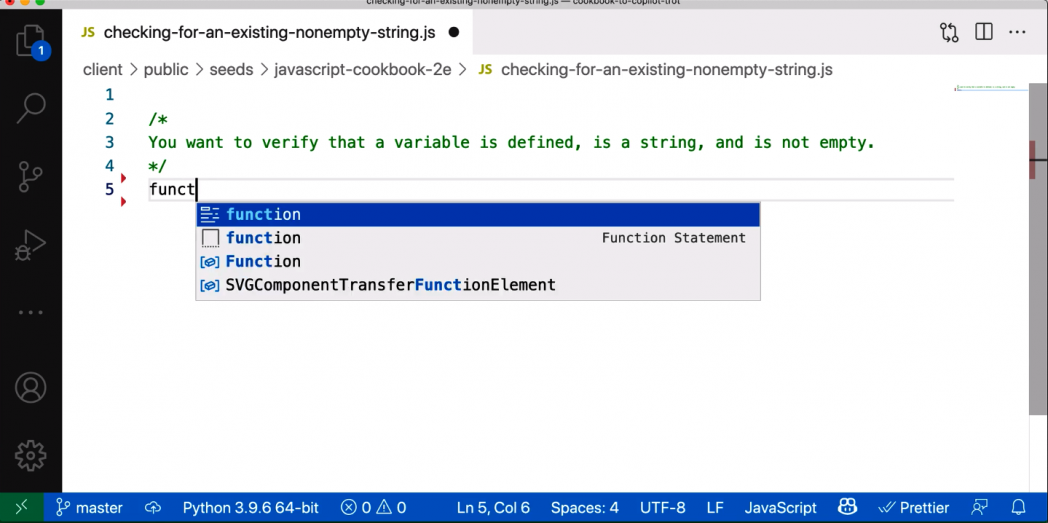

There has been a lot of research on using AI to generate code from human descriptions. GPT-3 has made that work more widely visible, but it’s been around for a while, and it’s ongoing. We’ve written about using AI as a partner in pair programming. While we were writing this report, Microsoft, OpenAI, and GitHub announced the first fruit of this research: Copilot, an AI tool that was trained on all the public code in GitHub’s codebase. Copilot makes suggestions while you write code, generating function bodies based on descriptive comments (Figure 3). Copilot turns programming on its head: rather than writing the code first, and adding comments as an afterthought, start by thinking carefully about the problem you want to solve and describing what the components need to do. (This inversion has some similarities to test-driven and behavior-driven development.)
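As an illustration of this comment-first style, consider the sketch below. It is hand-written for this report and is not actual Copilot output; the function name and the task are hypothetical. The programmer’s contribution is the descriptive comment, and the function body stands in for the kind of suggestion such a tool might offer.

```python
import re
from collections import Counter

# Return the n most common words in a text, ignoring case and punctuation,
# as a list of (word, count) pairs ordered from most to least frequent.
def most_common_words(text, n):
    # Hand-written stand-in for a generated body, for illustration only.
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words).most_common(n)


print(most_common_words("The cat and the hat. The cat!", 2))
```

The comment has to say what “word” and “most common” mean precisely enough that a wrong suggestion can be recognized as wrong, so the careful thinking moves into the description rather than disappearing.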

Still, this approach raises the question: how much work is required to find a description that generates the right code? Could technology like this be used to build a real-world project, and if so, would that help to democratize programming? It’s a fair question. Programming languages are precise and unambiguous, while human languages are by nature imprecise and ambiguous. Will compiling human language into code require a significant body of rules to make it, essentially, a programming language in its own right? Possibly. But on the other hand, Copilot takes on the burden of remembering syntax details, getting function names right, and many other tasks that are fundamentally just memory exercises.

Salvatore Sanfilippo (@antirez) touched on this in a Twitter thread, saying “Every task Copilot can do for you is a task that should NOT be part of modern programming.” Copilot doesn’t just free you from remembering syntax details, what functions are stashed in a library you rarely use, or how to implement some algorithm that you barely remember. It eliminates the boring drudgery of much of programming—and, let’s admit it, there’s a lot of that. It frees you to be more creative, letting you think more carefully about that task you’re doing, and how best to perform it. That’s liberating—and it extends programming to those who aren’t good at rote memory, but who are experts (“subject matter experts”) in solving particular problems.

Copilot is in its very early days; it’s called a “Technical Preview,” not even a beta. It’s certainly not problem-free. The code it generates is often incorrect (though you can ask it to create any number of alternatives, and one is likely to be correct). But it will almost certainly get better, and it will probably get better fast. When the code works, it’s often low-quality; as Jeremy Howard writes, language models reflect an average of how people use language, not great literature. Copilot is the same. But more importantly, as Howard says, most of a programmer’s work isn’t writing new code: it’s designing, debugging, and maintaining code. To use Copilot well, programmers will have to realize the trade-off: most of the work of programming won’t go away. You will need to understand, at a higher level, what you’re trying to do. For Sanfilippo, and for most good or great programmers, the interesting, challenging part of programming comes in that higher-level work, not in slinging curly braces.

By reducing the labor of writing code, allowing people to focus their effort on higher-level thought about what they want to do rather than on syntactic correctness, Copilot will certainly make creative computing possible for more people. And that’s democratization.

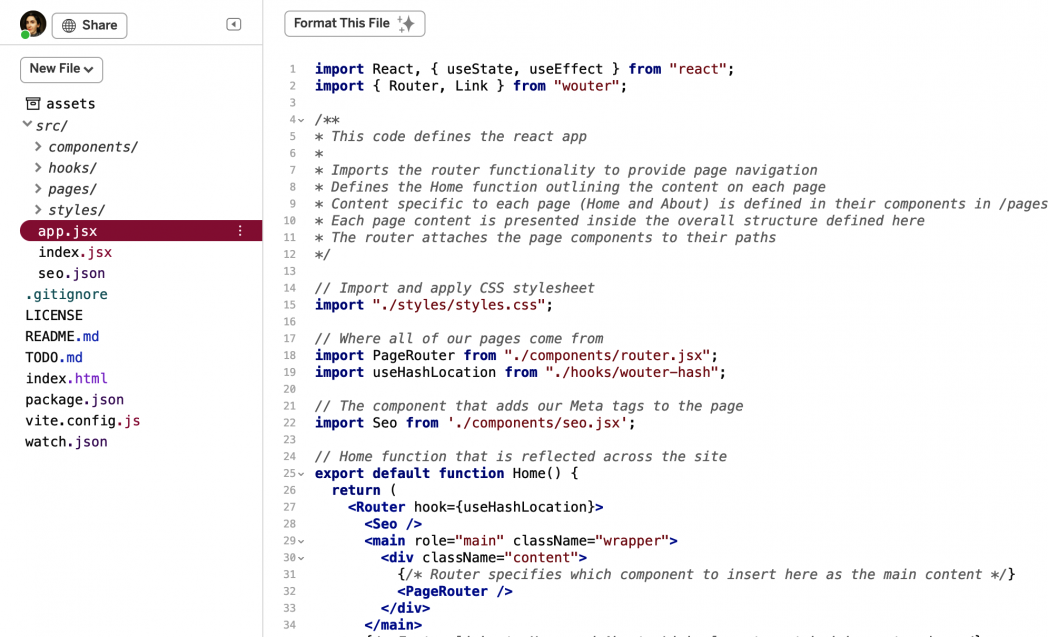

Glitch

Glitch, which has become a compelling platform for developing web applications, is another alternative. Glitch claims to return to the copy/paste model from the early days of web development, when you could “view source” for any web page, copy it, and make any changes you want. That model doesn’t eliminate code, but offers a different approach to understanding coding. It reduces the amount of code you write; this in itself is democratizing because it enables more people to accomplish things more quickly. Learning to program isn’t fun if you have to work for six months before you can build something you actually want. Glitch gets you interacting with code that’s already written and working from the start (Figure 4); you don’t have to stare at a blank screen and invent all the technology you need for the features you want. And it’s completely portable: Glitch code is just HTML, CSS, and JavaScript stored in a GitHub archive. You can take that code, modify it, and deploy it anywhere; you’re not stuck with Glitch’s proprietary app. Anil Dash, Glitch’s CEO, calls this “Yes code,” affirming the importance of code. Great artists steal from each other, and so do the great coders; Glitch is a platform that facilitates stealing, in all the best ways.

Forms and Templates

Finally, many low-code platforms make heavy use of forms. This is particularly common among business intelligence (BI) platforms. You could certainly argue that filling in a form isn’t low-code at all, it’s just using a canned app; but think about what’s happening. The fields in the form are typically a template for filling in a complex SQL statement. A relational database executes that statement, and the results are formatted and displayed for the users. This is certainly democratizing: SQL expertise isn’t expected of most managers—or, for that matter, of most programmers. BI applications unquestionably allow people to do what they couldn’t do otherwise. (Anyone at O’Reilly can look up detailed sales data in O’Reilly’s BI system, even those of us who have never learned SQL or written programs in any language.) Painlessly formatting the results, including visualizations, is one of the qualities that made Excel revolutionary.
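The form-as-template idea can be sketched in a few lines of Python using the standard `sqlite3` module. This is an assumption-laden illustration, not a description of any particular BI product: the `sales` table, its columns, the data, and the `run_report` function are all hypothetical. The user only ever supplies the two form fields; the SQL statement stays hidden.

```python
import sqlite3

# Hypothetical schema and data, standing in for a real sales database.
SETUP = """
CREATE TABLE sales (title TEXT, region TEXT, year INTEGER, units INTEGER);
INSERT INTO sales VALUES
    ('Book A', 'EU', 2020, 120),
    ('Book A', 'US', 2020, 300),
    ('Book A', 'US', 2021, 250),
    ('Book B', 'US', 2021, 90);
"""

# The "template": the user never sees this statement, only the form fields
# that supply the two values marked with named placeholders.
REPORT_SQL = """
SELECT title, SUM(units) AS total_units
FROM sales
WHERE region = :region AND year = :year
GROUP BY title
ORDER BY total_units DESC
"""


def run_report(conn, form):
    """Fill the template from the form fields and return the result rows."""
    return conn.execute(REPORT_SQL, form).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SETUP)
rows = run_report(conn, {"region": "US", "year": 2021})
for title, total in rows:
    print(f"{title:<20}{total:>6}")
```

The sketch also shows the limitation discussed next: the form can vary the region and the year, but a report that groups by something other than title needs a new template, and writing that template takes SQL skills.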

Similarly, low-code platforms for building mobile and web apps (such as Salesforce, Webflow, Honeycode, and Airtable) give non-programmers drag-and-drop, template-based tools for creating everything from consumer-facing apps to internal workflows. These platforms purport to be customizable, but they are ultimately finite: what you can build is bounded by the offerings and capabilities of each particular platform.

But do these templating approaches really allow a user to become creative? That may be the more important question. Templates arguably don’t. They allow the user to create one of a number (possibly a large number) of previously defined reports. But they rarely allow a user to create a new report without significant programming skills. In practice, regardless of how simple it may be to create a report, most users don’t go out of their way to create new reports. The problem isn’t that templating approaches are “ultimately finite”—that trade-off of limitations against ease comes with almost any low-code approach, and some template builders are extremely flexible. It’s that, unlike Excel, and unlike LabVIEW, and unlike Glitch, these tools don’t really offer new ways to think about problems.

It’s worth noting—in fact, it’s absolutely essential to note—that these low-code approaches rely on huge amounts of traditional code. Even LabVIEW—it may be completely visual, but LabVIEW and G were implemented in a traditional programming language. What they’re really doing is allowing people with minimal coding skills to make connections between libraries. They enable people to work by connecting things together, rather than building the things that are being connected. That will turn out to be very important, as we’ll start to examine next.

Rethinking the Programmer

Programmers have cast themselves as gurus and rockstars, or as artisans, and to a large extent resisted democratization. In the web space, that has been very explicit: people who use HTML and CSS, but not sophisticated JavaScript, are “not real programmers.” It’s almost as if the evolution of the web from a Glitch-like world of copy and paste towards complex web apps took place with the intention of forcing out the great unwashed, and creating an underclass of coding-disabled.

Low-code and no-code are about democratization, about extending the ability to be creative with computers and creating new citizen programmers. We’ve seen that it works in two ways: on the low end (as with Excel), it allows people with no formal programming background to perform computational tasks. Perhaps more significantly, Excel and similar tools allow a user to gradually work up the ladder to more complex tasks: from simple formatting to spreadsheets that do computation, to full-fledged programming.

Can we go further? Can we enable subject matter experts to build sophisticated applications without needing to communicate their understanding to a group of coders? At the Strata Data Conference in 2019, Jeremy Howard discussed an AI application for classifying burns. This deep-learning application was trained by a dermatologist—a subject matter expert—who had no knowledge of programming. All the major cloud providers have services for automating machine learning, and there’s an ever-increasing number of AutoML tools that aren’t tied to a specific provider. Eliminating the knowledge transfer between the SME and the programmer by letting SMEs build the application themselves is the shortest route to building better software.

On the high end, the intersection between AI and programming promises to make skilled programmers more productive by making suggestions, detecting bugs and vulnerabilities, and writing some of the boilerplate code itself. IBM is trying to use AI to automate translations between different programming languages; we’ve already mentioned Microsoft’s work on generating code from human-language descriptions of programming tasks, culminating with their Copilot project. This technology is still in the very early days, but it has the potential to change the nature of programming radically.

These changes suggest that there’s another way of thinking about programmers. Let’s borrow the distinction between “blue-collar” and “white-collar” workers. Blue-collar programmers connect things; white-collar programmers build the things to be connected. This is similar to the distinction between the person who installs or connects household appliances and the person who designs them. You wouldn’t want your plumber designing your toilet; but likewise, you wouldn’t want a toilet designer (who wears a black turtleneck and works in a fancy office building) to install the toilet they designed.

This model is hardly a threat to the industry as it’s currently institutionalized. We will always need people to connect things; that’s the bulk of what web developers do now, even those working with frameworks like React.js. In practice, there has been (and will continue to be) a lot of overlap between the “tool designer” and “tool user” roles. That won’t change. The essence of low-code is that it allows more people to connect things and become creative. We must never undervalue that creativity, but likewise, we have to understand that more people connecting things (managers, office workers, executives) doesn’t reduce the need for professional tools, any more than 3D printers reduced the need for manufacturing engineers.

The more people who are capable of connecting things, the more things need to be connected. Programmers will be needed to build everything from web widgets to the high-level tools that let citizen programmers do their work. And many citizen programmers will see ways for tools to be improved or have ideas about new tools that will help them become more productive, and will start to design and build their own tools.

Rethinking Programmer Education

Once we make the distinction between blue- and white-collar programmers, we can talk about what kinds of education are appropriate for the two groups. A plumber goes to a trade school and serves an apprenticeship; a designer goes to college, and may serve an internship. How does this compare to the ways programmers are educated?

As complex as modern web frameworks like React.js may be (and we suspect they’re a very programmerly reaction against democratization), you don’t need a degree to become a competent web developer. The educational system is beginning to shift to take this into account. Boot camps (a format probably originating with Gregory Brown’s Ruby Mendicant University) are the programmer’s equivalent of trade schools. Many boot camps facilitate internships and initial jobs. Many students at boot camps already have degrees in a non-technical field, or in a technical field that’s not related to programming.

Computer science majors in colleges and universities provide the “designer” education, with a focus on theory and algorithms. Artificial intelligence is a subdiscipline that originated in academia, and is still driven by academic research. So are disciplines like bioinformatics, which straddles the boundaries between biology, medicine, and computer science. Programs like Data Carpentry and Software Carpentry (two of the three organizations that make up “The Carpentries”) cater specifically to graduate students who want to improve their data or programming skills.

This split matches a reality that we’ve always known. You’ve never needed a four-year computer science degree to get a programming job; you still don’t. There are many, many programmers who are self-taught, and some startup executives who never entered college (let alone finished it); as one programmer who left a senior position to found a successful startup once said in conversation, “I was making too much money building websites when I was in high school.” No doubt some of those who never entered college have made significant contributions in algorithms and theory.

Boot camps and four-year institutions both have weaknesses. Traditional colleges and universities pay little attention to the parts of the job that aren’t software development: teamwork, testing, agile processes, as well as areas of software development that are central to the industry now, such as cloud computing. Students need to learn how to use databases and operating systems effectively, not design them. Boot camps, on the other hand, range from the excellent to the mediocre. Many go deep on a particular framework, like Rails or React.js, but don’t give students a broader introduction to programming. Many engage in ethically questionable practices around payment (boot camps aren’t cheap) and job placement. Picking a good boot camp may be as difficult as choosing an undergraduate college.

To some extent, the weaknesses of boot camps and traditional colleges can be helped through apprenticeships and internships. However, even that requires care: many companies use the language of “agile” and CI/CD, but have only renamed their old, ineffective processes. How can interns be placed in positions where they can learn modern programming practices, when the companies in which they’re placed don’t understand those practices? That’s a critical problem, because we expect that trained programmers will, in effect, be responsible for bringing these practices to the low-code programmers.

Why? The promise is that low-code allows people to become productive and creative with little or no formal education. We aren’t doing anyone a service by sneaking educational requirements in through the back door. “You don’t have to know how to program, but you do have to understand deployment and testing”—that misses the point. But that’s also essential, if we want software built by low-code developers to be reliable and deployable—and if software created by citizen programmers can’t be deployed, “democratization” is a fraud. That’s another place where professional software developers fit in. We will need people who can create and maintain the pipelines by which software is built, tested, archived, and deployed. Those tools already exist for traditional code-heavy languages; but new tools will be needed for low-code frameworks. And the programmers who create and maintain those tools will need to have experience with current software development practices. They will become the new teachers, teaching everything about computing that isn’t coding.

Education doesn’t stop there; good professionals are always learning. Acquiring new skills will be a part of both the blue-collar and white-collar programmer experience well beyond the pervasiveness of low-code.

Rethinking the Industry

If programmers change, so will the software industry. We see three changes. First, in the last 20 years, we’ve learned a lot about managing the software development process. That’s an intentionally vague phrase that includes everything from source management (which has a history that goes back to the 1970s) to continuous deployment pipelines. And we have to ask: if useful work is coming from low-code developers, how do we maintain that? What does GitHub for Excel, LabVIEW, or GPT-3 look like? When something inevitably breaks, what will debugging and testing look like when dealing with low-code programs? What does continuous delivery mean for applications written with SAP or PageMaker? Glitch, Copilot, and Microsoft’s Power Fx are the only low-code systems we’ve discussed that can answer this question right now. Glitch fits into CI/CD practice because it’s a system for writing less code, and copying more, so it’s compatible with our current tooling. Likewise, Copilot helps you write code in a traditional programming language that works well with CI/CD tools. Power Fx fits because it’s a traditional text-based language: Excel formulas without the spreadsheet. (It’s worth noting that Excel’s .xlsx files aren’t amenable to source control, nor do they have great tools for debugging and testing, which are a standard part of software development.) Extending fundamental software development practices like version control, automated testing, and continuous deployment to other low-code and no-code tools sounds like a job for programmers, and one that’s still on the to-do list.
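To make the version-control point concrete, here is a minimal sketch of why a text-based formula language fits existing tooling. It assumes the formulas have already been exported from a spreadsheet into a plain mapping of cell name to formula text; the cell names, formulas, version labels, and helper functions are hypothetical, and only Python’s standard `difflib` module is used.

```python
import difflib


def dump_formulas(formulas):
    """Render a {cell: formula} mapping as text, one formula per line.

    Cells are sorted lexicographically so the output is stable between runs.
    """
    return [f"{cell} = {formula}" for cell, formula in sorted(formulas.items())]


def diff_formulas(old, new):
    """Return a unified diff (list of lines) between two formula sets."""
    return list(
        difflib.unified_diff(
            dump_formulas(old),
            dump_formulas(new),
            fromfile="budget@v1",  # hypothetical version labels
            tofile="budget@v2",
            lineterm="",
        )
    )


# Hypothetical formulas, not taken from any real workbook.
v1 = {"B2": "SUM(A1:A10)", "B3": "B2 * 0.2"}
v2 = {"B2": "SUM(A1:A10)", "B3": "B2 * 0.25"}

for line in diff_formulas(v1, v2):
    print(line)
```

Once formulas live in a stable text form like this, a changed tax rate shows up as a one-line diff that can be reviewed, tested, and reverted like any other code change. Getting the formulas out of a binary or zipped workbook in the first place is the part that still needs tooling.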

Second, making tool designers and builders more effective will undoubtedly lead to new and better tools. That almost goes without saying. But we hope that if coders become more effective, they will spend more time thinking about the code they write: how it will be used, what problems they are trying to solve, what ethical questions these problems raise, and so on. This industry has no shortage of badly designed and ethically questionable products. Rather than rushing a product into release without considering its implications for security and safety, perhaps making software developers more effective will let them spend more time thinking about these issues up front, and during the process of software development.

Finally, an inevitable shift in team structure will occur across the industry, allowing programmers to focus on solving with code what low-code solutions can’t solve, and ensuring that what is solved through low-code solutions is carefully monitored and corrected. Just as spreadsheets can be buggy and an errant decimal or bad data point can sink businesses and economies, buggy low-code programs built by citizen programmers could just as easily cause significant headaches. Collaboration, not further division, between programmers and citizen programmers within a company will ensure that low-code solutions are productive, not disruptive, as programming becomes further democratized. Rebuilding teams with this kind of collaboration and governance in mind could increase productivity for companies large and small: it would give smaller companies that can’t afford specialization the ability to diversify their applications, and allow larger companies to build more impactful and ethical software.

Rethinking Code Itself

Still, when we look at the world of low-code and no-code programming, we feel a nagging disappointment. We’ve made great strides in producing libraries that reduce the amount of code programmers need to write; but it’s still programming, and that’s a barrier in itself. We’ve seen limitations in other low-code or no-code approaches; they’re typically “no code until you need to write code.” That’s progress, but only progress of a sort. Many of us would rather program in Python than in PL/I or Fortran, but that’s a difference of quality, not of kind. Are there any ways to rethink programming at a fundamental level? Can we ever get beyond 80-character lines that, no matter how good our IDEs and refactoring tools might be, are really just virtual punch cards?

Here are a few ideas.

Bret Victor’s Dynamicland represents a complete rethinking of programming. It rejects the notion of programming with virtual objects on laptop screens; it’s built upon the idea of working with real-world objects, in groups, without the visible intermediation of computers. People “play” with objects on a tabletop; sensors detect and record what they’re doing with the objects. The way objects are arranged becomes the program. It’s more like playing with Lego blocks (in real life, not some virtual world), or with paper and scissors, than the programming that we’ve become accustomed to. And the word “play” is important. Dynamicland is all about reenvisioning computing as play rather than work. It’s the most radical attempt at no-code programming that we’ve seen.

Dynamicland is a “50-year project.” At this point, we’re 6 years in: only at the beginning. Is it the future? We’ll see.

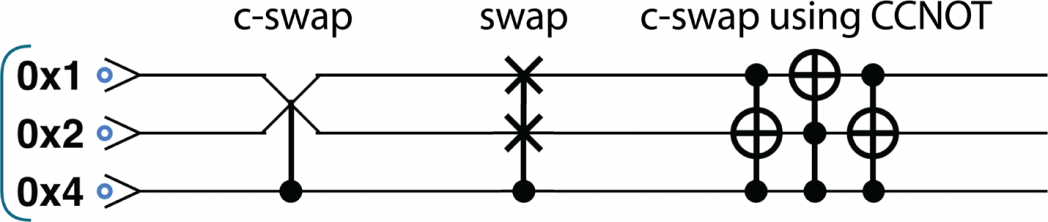

If you’ve followed quantum computing, you may have seen quantum circuit notation (shown in Figure 5), a way of writing quantum programs that looks sort of like music: a staff composed of lines representing qubits, with operations connecting those lines. We’re not going to discuss quantum programming; we find this notation suggestive for other reasons. Could it represent a different way to look at the programming enterprise? Kevlin Henney has talked about programming as managing space and time. Traditional programming languages are (somewhat) good about space; languages like C, C++, and Java require you to define datatypes and data structures. But we have few tools for managing time, and (unsurprisingly) it’s hard to write concurrent code. Music is all about time management. Think of a symphony and the 100 or so musicians as independent “threads” that have to stay synchronized, or think of a jazz band, where improvisation is central, but synchronization remains a must. Could a music-aware notation (such as Sonic Pi) lead to new ways of thinking about concurrency? And would such a notation be more approachable than virtual punch cards? This rethinking will inevitably fail if it tries too literally to replicate staves, note values, clefs, and such; but it may be a way to free ourselves from thinking about business as usual.
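For contrast, here is roughly what the orchestra analogy looks like in today’s text-based tooling. This is only an illustrative sketch: the `perform` function and the player names are hypothetical, and it uses Python’s standard `threading.Barrier` as a stand-in for the conductor’s downbeat. Each musician is a thread, and no one may start the next measure until everyone has finished the current one.

```python
import threading


def perform(players, measures):
    """Run one thread per player; a barrier keeps everyone on the same measure."""
    log = []
    lock = threading.Lock()
    barrier = threading.Barrier(len(players))

    def play(name):
        for measure in range(measures):
            with lock:  # protect the shared log
                log.append((measure, name))
            # Wait here until every player has finished this measure.
            barrier.wait()

    threads = [threading.Thread(target=play, args=(p,)) for p in players]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


# Hypothetical ensemble, for illustration only.
score = perform(["violin", "cello", "oboe"], measures=4)
for measure, name in score:
    print(measure, name)
```

Within a measure the players may appear in any order, but no entry for a later measure can precede one for an earlier measure. Notice how much of the code is bookkeeping (a lock, a barrier, explicit joins) for something a musical score expresses simply by lining notes up vertically.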

Here’s an even more radical thought. At an early Biofabricate conference, a speaker from Microsoft was talking about tools for programming DNA. He said something mind-blowing: we often say that DNA is a “programming language,” but it has control structures that are unlike anything in our current programming languages. It’s not clear that those control structures are representable in text. Our present notion of computation (and, for that matter, of what’s “computable”) derives partly from the Turing machine (a thought experiment) and von Neumann’s notion of how to build such a machine. But are there other kinds of machines? Quantum computing says so; DNA says so. What are the limits of our current understanding of computing, and what kinds of notation will it take to push beyond those limits?

Finally, programming has been dominated by English speakers, and programming languages are, with few exceptions, mangled variants of English. What would programming look like in other languages? There are programming languages in a number of non-English languages, including Arabic, Chinese, and Amharic. But the most interesting is the Cree# language, because it isn’t just an adaptation of a traditional programming language. Cree# tries to reenvision programming in terms of the indigenous American Cree culture, which revolves around storytelling. It’s a programming language for stories, built around the logic of stories. And as such, it’s a different way of looking at the world. That way of looking at the world might seem like an arcane curiosity (and currently Cree# is considered an “esoteric programming language”); but one of the biggest problems facing the artificial intelligence community is developing systems that can explain the reason for a decision. And explanation is ultimately about storytelling. Could Cree# provide better ways of thinking about algorithmic explainability?

Where We’ve Been and Where We’re Headed

Does a new way of programming increase the number of people who are able to be creative with computers? It has to; in “The Rise of the No Code Economy”, the authors write that relying on IT departments and professional programmers is unsustainable. We need to enable people who aren’t programmers to develop the software they need. We need to enable people to solve their own computational problems. That’s the only way “digital transformation” will happen.

We’ve talked about digital transformation for years, but relatively few companies have done it. One lesson to take from the COVID pandemic is that every business has to become an online business. When people can’t go into stores and restaurants, everything from the local pizza shop to the largest retailers needs to be online. When everyone is working at home, they are going to want tools to optimize their work time. Who is going to build all that software? There may not be enough programming talent to go around. There may not be enough of a budget to go around (think about small businesses that need to transact online). And there certainly won’t be the patience to wait for a project to work its way through an overworked IT department. Forget about yesterday’s arguments over whether everyone should learn to code. We are entering a business world in which almost everyone will need to code—and low-, no-, and yes-code frameworks are necessary to enable that. To enable businesses and their citizen programmers to be productive, we may see a proliferation of DSLs: domain-specific languages designed to solve specific problems. And those DSLs will inevitably evolve towards general purpose programming languages: they’ll need web frameworks, cloud capabilities, and more.

“Enterprise low-code” isn’t all there is to the story. We also have to consider what low-code means for professional programmers. Doing more with less? We can all get behind that. But for professional programmers, “doing more with less” won’t mean using a templating engine and a drag-and-drop interface builder to create simple database applications. These tools inevitably limit what’s possible; that’s precisely why they’re valuable. Professional programmers will be needed to do what the low-code users can’t. They will build new tools, and make the connections between these tools and the old tools. Remember that the amount of “glue code” that connects things rises as the square of the number of things being connected, and that most of the work involved in gluing components together is data integration, not just managing formats. Anyone concerned about computing jobs drying up should stop worrying; low-code will inevitably create more work, rather than less.
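One way to see where the square comes from, assuming the worst case in which every component may need to exchange data with every other: n components have n(n − 1)/2 distinct pairs, which grows roughly as n²/2. The short sketch below (the function name is our own) tabulates that count.

```python
def pairwise_connections(n):
    """Number of distinct pairs among n components: n * (n - 1) / 2."""
    return n * (n - 1) // 2


for n in (2, 5, 10, 20):
    print(f"{n:>2} components -> {pairwise_connections(n):>3} possible connections")
```

Doubling the number of tools from 10 to 20 roughly quadruples the possible connections (from 45 to 190). Real systems rarely connect everything to everything, so this is an upper bound rather than a forecast.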

There’s another side to this story, though: what will the future of programming look like? We’re still working with paradigms that haven’t changed much since the 1950s. As Kevlin Henney pointed out in conversation, most of the trendy new features in programming languages were actually invented in the 1970s: iterators, foreach loops, multiple assignment, coroutines, and many more. A surprising number of these go back to the CLU language from 1975. Will we continue to reinvent the past, and is that a bad thing? Are there fundamentally different ways to describe what we want a computer to do, and if so, where will those come from? We started with the idea that the history of programming was the history of “less code”: finding better abstractions, and building libraries to implement those abstractions. That progress will certainly continue. It will certainly be aided by tools like Copilot, which will enable subject matter experts to develop software with less help from professional programmers. AI-based coding tools might not generate “less” code, but humans won’t be writing it. Instead, they’ll be thinking about and analyzing the problems that they need to solve.
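For readers who haven’t met them under these names, the features listed above look like this in a present-day language. This is just an illustrative sketch in Python with made-up function names; it says nothing about how CLU or any other early language spelled them.

```python
def countdown(n):
    """Iterator, written as a generator: yields n, n-1, ..., 1."""
    while n > 0:
        yield n
        n -= 1


def running_total():
    """Generator-based coroutine: receives numbers via send(), yields the running sum."""
    total = 0
    while True:
        value = yield total
        total += value


# foreach-style loop over an iterator
values = []
for v in countdown(3):
    values.append(v)

# multiple assignment: swap two names without a temporary
a, b = 1, 2
a, b = b, a

# coroutine: prime it, then feed it values one at a time
acc = running_total()
next(acc)
for v in values:
    last = acc.send(v)

print(values, (a, b), last)
```

None of these ideas would surprise a language designer from decades ago, which is the point of the observation: the syntax has become friendlier, but the underlying abstractions are old.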

But what happens next? A tool like Copilot can handle a lot of the “grunt work” that’s part of programming, but it’s (so far) built on the same set of paradigms and abstractions. Python is still Python. Linked lists and trees are still linked lists and trees, and getting concurrency right is still difficult. Are the abstractions we inherited from the past 70 years adequate to a world dominated by artificial intelligence and massively distributed systems?

Probably not. Just as the two-dimensional grid of a spreadsheet allows people to think outside the box defined by lines of computer code, and just as the circuit diagrams of LabVIEW allow engineers to envision code as wiring diagrams, what will give us new ways to be creative? We’ve touched on a few: musical notation, genetics, and indigenous languages. Music is important because musical scores are all about synchronization at scale; genetics is important because of control structures that can’t be represented by our ancient IF and FOR statements; and indigenous languages help us to realize that human activity is fundamentally about stories. There are, no doubt, more. Is low-code the future—a “better abstraction”? We don’t know, but it will almost certainly enable different code.

We would like to thank the following people whose insight helped inform various aspects of this report: Daniel Bryant, Anil Dash, Paul Ford, Kevlin Henney, Danielle Jobe, and Adam Olshansky.